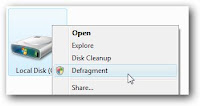

How to add a defragment option to the drive right-click menu

We do a lot of work on our hard drives, but we rarely remember to defragment them, because finding the defragment option and running the process is tedious. If we add a Defragment option to every drive's right-click menu, there is no need to go looking for it.

Press Windows Key + R, type regedit.exe, and go to the following registry key:

HKEY_CLASSES_ROOT\Drive\shell

Create new key

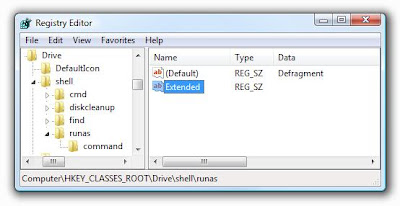

Create a new key under the shell key named “runas”, then change its “(Default)” value to “Defragment”. Naming the key runas makes Windows run the command with administrator rights, which defrag needs. If you want to hide this menu item so that it only appears when you hold Shift while right-clicking, add a new String Value named “Extended” with no data.

Now see the magic

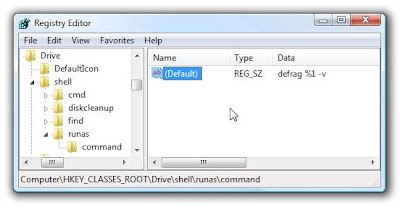

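Now create a new key under runas named “Command” and set its “(Default)” value to:

defrag %1 -v

Here %1 is replaced with the drive you right-clicked, and -v asks defrag for verbose output.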
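If you would rather not click through regedit, you can write the same keys as a .reg file and import them by double-clicking it. The Python sketch below builds the text of that file. The key path, value names and command come from the steps above. The function names `build_defrag_reg`, `reg_string` and `save_reg` are hypothetical helpers, and so is the file name `add_defragment.reg`. Registry key names are case-insensitive, so `command` and `Command` refer to the same key.

```python
# Sketch: build a .reg file that reproduces the manual regedit steps above.
# build_defrag_reg, reg_string, save_reg and "add_defragment.reg" are hypothetical names.

REG_HEADER = "Windows Registry Editor Version 5.00"
SHELL_KEY = r"HKEY_CLASSES_ROOT\Drive\shell\runas"


def reg_string(value: str) -> str:
    """Quote a value for a .reg file, escaping backslashes and double quotes."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def build_defrag_reg(menu_text: str = "Defragment",
                     command: str = "defrag %1 -v",
                     extended: bool = False) -> str:
    """Return .reg text that adds a drive context-menu entry running `command`."""
    lines = [REG_HEADER, "", f"[{SHELL_KEY}]", "@=" + reg_string(menu_text)]
    if extended:
        # An empty "Extended" string shows the entry only on Shift + right-click.
        lines.append('"Extended"=""')
    lines += ["", f"[{SHELL_KEY}\\command]", "@=" + reg_string(command), ""]
    return "\r\n".join(lines)


def save_reg(path: str, text: str) -> None:
    """Write the file as UTF-16 with a BOM, the encoding regedit exports Version 5.00 files in."""
    with open(path, "w", encoding="utf-16", newline="") as f:
        f.write(text)
```

For example, `save_reg("add_defragment.reg", build_defrag_reg(extended=True))` writes a file that also hides the entry behind Shift.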

Once that is done, right-click any drive to see the new Defragment option, and show it to others.

Thanks

SOMETHING ABOUT FRAGMENTATION

In the maintenance of file systems, defragmentation is a process that reduces the degree of fragmentation. It does this by physically organizing the contents of the mass storage device used to store files into the smallest number of contiguous regions (fragments). It also tries to create larger regions of free space using compaction, to impede the return of fragmentation. Some defragmentation utilities try to keep smaller files within a single directory together, as they are often accessed in sequence.

Defragmentation is advantageous and relevant to file systems on electromechanical disk drives. Moving the hard drive's read/write heads over different areas of the disk to access a fragmented file is slower than reading the entire contents of a non-fragmented file sequentially, without moving the heads to seek other fragments.

Causes of fragmentation

Fragmentation occurs when the file system cannot or will not allocate enough contiguous space to store a complete file as a unit, and instead puts parts of it in gaps between other files. Usually those gaps exist because they formerly held a file that the file system has since deleted, or because the file system allocated excess space for the file in the first place. Larger files and greater numbers of files also contribute to fragmentation and the resulting performance loss. Defragmentation tries to alleviate these problems.

Example

Figure: File system fragmentation (examples 1 to 5)

Consider the following scenario, as shown in the figure:

An otherwise blank disk has five files, A through E, each using 10 blocks of space. For this section, a block is an allocation unit of the file system; the block size is set when the disk is formatted and can be any size supported by the file system. On a blank disk, all of these files would be allocated one after the other (see example 1 in the image). If file B were deleted, there would be two options: mark the space for file B as empty to be used again later, or move all the files after B so that the empty space is at the end. Moving the files could be time-consuming if many files needed to be moved, so usually the empty space is simply left there, marked in a table as available for new files (see example 2 in the image). When a new file, F, is allocated and needs six blocks of space, it could be placed into the first six blocks of the space that formerly held file B, and the four blocks following it would remain available (see example 3 in the image). If another new file, G, is added and needs only four blocks, it could then occupy the space after F and before C (example 4 in the image). However, if file F needs to be expanded, there are two options, since the space immediately following it is no longer available:

1. Move file F to where it can be created as one contiguous file of the new, larger size. This would not be possible if the file is larger than the largest contiguous free space available. The file could also be so large that the operation would take an undesirably long time.

2. Add a new block somewhere else, and indicate that F has a second extent (see example 5 in the image). Repeat this many times and the file system will have a number of small free segments scattered in many places, and some files will have multiple extents. When a file has many extents like this, the access time for that file may become excessively long because of all the random seeking the disk has to do when reading it.
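The scenario above can be replayed with a toy block map. The sketch below is a simplified model, not how any real file system allocates space. It assumes a 60-block disk, a first-fit allocator, and a hypothetical `Disk` class. Its `extend` method grows a file in place when the following blocks are free. Otherwise it takes the second option above and adds a new extent elsewhere.

```python
class Disk:
    """Toy block map: each entry is a file name, or None for a free block."""

    def __init__(self, size):
        self.blocks = [None] * size

    def _free_runs(self):
        """Yield (start, length) for each run of consecutive free blocks."""
        start = None
        for i, owner in enumerate(self.blocks + ["<end>"]):  # sentinel closes the last run
            if owner is None and start is None:
                start = i
            elif owner is not None and start is not None:
                yield start, i - start
                start = None

    def allocate(self, name, count):
        """First fit: use the first free run big enough, else scatter over free blocks."""
        for start, length in self._free_runs():
            if length >= count:
                self.blocks[start:start + count] = [name] * count
                return
        free = [i for i, owner in enumerate(self.blocks) if owner is None]
        if len(free) < count:
            raise OSError("disk full")
        for i in free[:count]:
            self.blocks[i] = name

    def delete(self, name):
        """Mark the file's blocks free without moving anything else."""
        self.blocks = [None if owner == name else owner for owner in self.blocks]

    def extend(self, name, count):
        """Grow in place if the next blocks are free, otherwise add a new extent."""
        last = max(i for i, owner in enumerate(self.blocks) if owner == name)
        following = self.blocks[last + 1:last + 1 + count]
        if len(following) == count and all(o is None for o in following):
            self.blocks[last + 1:last + 1 + count] = [name] * count
        else:
            self.allocate(name, count)

    def extents(self, name):
        """Count runs of consecutive blocks owned by `name`."""
        runs, prev = 0, None
        for owner in self.blocks:
            if owner == name and prev != name:
                runs += 1
            prev = owner
        return runs


disk = Disk(60)
for name in "ABCDE":
    disk.allocate(name, 10)   # example 1: A..E packed one after another
disk.delete("B")              # example 2: B's 10 blocks become a gap
disk.allocate("F", 6)         # example 3: F fills the start of the gap
disk.allocate("G", 4)         # example 4: G fills the rest of the gap
disk.extend("F", 3)           # example 5: no room after F, so a second extent
print(disk.extents("F"))      # 2
```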

Additionally, the concept of “fragmentation” is not limited to individual files that have multiple extents on the disk. For instance, consider a group of files that are normally read in a particular sequence, such as the files a program reads while loading (certain DLLs, various resource files, or the audio/visual media files in a game). That group can be considered fragmented if the files are not in sequential load order on the disk, even if each file is itself contiguous, because the read/write heads have to seek to scattered positions to read them in sequence. Some groups of files may have been originally installed in the correct sequence, but drift apart over time as certain files within the group are deleted. Updates are a common cause of this, because to update a file, most updaters delete the old file first and then write a new, updated one in its place. However, most file systems do not write the new file in the same physical place on the disk, which allows unrelated files to fill in the empty areas left behind. In Windows, a good defragmenter will read the Prefetch files to identify as many of these file groups as possible and place the files within them in access sequence. Another frequently good assumption is that files in any given folder are related to each other and might be accessed together.
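One way to make load-order fragmentation concrete is to count how often the head must jump while reading a group of files in sequence. The sketch below deliberately simplifies. Each file is a single contiguous `(start_block, length)` extent, and any jump to a block that does not immediately follow the previous one counts as one seek, regardless of distance. `count_seeks` is a hypothetical helper.

```python
def count_seeks(extents_in_read_order):
    """Count head repositionings needed to read extents in the given order.

    Each extent is (start_block, length). Reading continues without a seek
    only when an extent starts exactly where the previous one ended."""
    seeks, head = 0, None
    for start, length in extents_in_read_order:
        if head != start:
            seeks += 1          # jump to a non-adjacent position
        head = start + length   # head now sits just past this extent
    return seeks


# Three unfragmented files a program reads at startup, in load order on disk:
in_order = [(100, 5), (105, 3), (108, 2)]
# The same files after an update rewrote the middle one elsewhere:
drifted = [(100, 5), (400, 3), (108, 2)]
print(count_seeks(in_order), count_seeks(drifted))  # 1 3
```

None of the three files is fragmented in either layout. Only their relative placement changes the seek count.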

To defragment a disk, defragmentation software (also called a "defragmenter") can only move files around within the free space available. This is an intensive operation and cannot be performed on a file system with little or no free space. During defragmentation, system performance will be degraded, and it is best to leave the computer alone during the process so that the defragmenter does not get confused by unexpected changes to the file system. Depending on the algorithm used, it may or may not be advantageous to perform multiple passes. The reorganization involved in defragmentation does not change the logical location of the files (defined as their location within the directory structure).
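A small sketch can show why a defragmenter is limited to the existing free space. It assumes a toy layout: a list where each entry is a file name, or None for a free block. A hypothetical `defragment_file` copies a file into one free contiguous run and only then frees the old fragments. It therefore fails when no free run is large enough. Real defragmenters use more elaborate strategies; this only illustrates the constraint.

```python
def free_runs(layout):
    """Return (start, length) for each run of consecutive free (None) blocks."""
    runs, start = [], None
    for i, owner in enumerate(layout + ["<end>"]):  # sentinel closes a trailing run
        if owner is None and start is None:
            start = i
        elif owner is not None and start is not None:
            runs.append((start, i - start))
            start = None
    return runs


def defragment_file(layout, name):
    """Copy all blocks of `name` into one free contiguous run, then free the old ones.

    Returns False (leaving `layout` unchanged) when no free run is big enough."""
    size = layout.count(name)
    for start, length in free_runs(layout):
        if length >= size:
            for i, owner in enumerate(layout):
                if owner == name:
                    layout[i] = None                    # release old fragments
            layout[start:start + size] = [name] * size  # write one contiguous copy
            return True
    return False


layout = ["A", "A", "F", "F", "G", "G", "C", "C", "F", None, None, None]
print(defragment_file(layout, "F"), layout)
full = ["F", "G", "F", "G"]
print(defragment_file(full, "F"))  # False: no free space to move into
```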

Common countermeasures

Partitioning

A common strategy to optimize defragmentation and reduce the impact of fragmentation is to partition the hard disk(s) so that parts of the file system that see many more reads than writes are kept separate from the more volatile zones where files are created and deleted frequently. The directories that contain users' profiles are modified constantly, especially the Temp directory and application caches, which create thousands of files that are deleted within a few days. If files from user profiles are held on a dedicated partition, the defragmenter runs better because it does not need to deal with all the static files from other directories. This is commonly done on Unix-recommended file systems, where frequently changing data is typically stored in the /var partition. For partitions with relatively little write activity, defragmentation time greatly improves after the first defragmentation, since the defragmenter will only need to defragment a small number of new files in the future.

Offline defragmentation

The presence of immovable system files, especially a swap file, can impede defragmentation. These files can be safely moved when the operating system is not in use. For example, ntfsresize moves these files in order to resize an NTFS partition. The tool PageDefrag could defragment Windows system files, such as the swap file and the files that store the Windows registry, by running at boot time before the graphical user interface is loaded. Since Windows Vista, this feature has not been fully supported and the tool has not been updated.

In NTFS, as files are added to the disk, the Master File Table (MFT) must grow to store the information for the new files. Every time the MFT cannot be extended because some file is in the way, the MFT gains a fragment. In early versions of Windows, the MFT could not be safely defragmented while the partition was mounted, so Microsoft built a hard block into the defragmentation API. Since Windows XP, however, an increasing number of defragmenters have been able to defragment the MFT, because the Windows defragmentation API was improved and now supports that move operation. Even with these improvements, the first four clusters of the MFT remain immovable by the Windows defragmentation API. As a result, some defragmenters store the MFT in two fragments: the first four clusters wherever they were placed when the disk was formatted, and the rest of the MFT at the beginning of the disk (or wherever the defragmenter's strategy deems best).

User and performance issues

In a wide range of modern multi-user operating systems, an ordinary user cannot defragment the system disks, since superuser (or "Administrator") access is required to move system files. Additionally, file systems such as NTFS are designed to decrease the likelihood of fragmentation. Improvements in modern hard drives, such as RAM cache, faster platter rotation speed, command queuing (SCSI TCQ/SATA NCQ) and greater data density, reduce the negative impact of fragmentation on system performance to some degree. Increases in commonly used data quantities, however, offset those benefits. Modern systems also benefit enormously from the large disk capacities now available, since partially filled disks fragment much less than full disks. On a high-capacity HDD, the same partition also occupies a smaller range of cylinders, resulting in faster seeks. Even so, the average access time can never drop below the average rotational latency of half a platter rotation. Platter rotation speed (measured in rpm) is also the HDD characteristic that has improved the slowest over the decades, compared with data transfer rate and seek time. Minimizing the number of seeks therefore remains beneficial in most storage-heavy applications. Defragmentation does exactly that: it ensures there is at most one seek per file, counting only seeks to non-adjacent tracks.

When reading data from a conventional electromechanical hard disk drive, the disk controller must first move the head, relatively slowly, to the track where a given fragment resides, and then wait while the platter rotates until the fragment reaches the head.
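The per-fragment cost described above can be turned into a rough back-of-the-envelope model: one seek plus, on average, half a platter rotation for each fragment. The seek time, transfer rate and file size below are assumed example values, not measurements. The model ignores caching and command queuing. `rotational_latency_ms` and `read_time_ms` are hypothetical helpers.

```python
def rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    return 0.5 * 60_000 / rpm


def read_time_ms(fragments: int, avg_seek_ms: float, rpm: float,
                 size_mb: float, transfer_mb_s: float) -> float:
    """Rough time to read a file split into `fragments` pieces.

    Each fragment costs one average seek plus half a rotation; the data
    itself then streams at the sustained transfer rate."""
    positioning = fragments * (avg_seek_ms + rotational_latency_ms(rpm))
    transfer = size_mb / transfer_mb_s * 1000
    return positioning + transfer


# Assumed example drive: 7200 rpm, 9 ms average seek, 100 MB/s sustained; 10 MB file.
for n in (1, 50):
    print(n, round(read_time_ms(n, 9, 7200, 10, 100), 1))
```

Under these assumptions, half a rotation at 7200 rpm is about 4.17 ms. The 10 MB file takes about 113 ms to read in one piece and about 758 ms in 50 fragments. The difference is almost entirely positioning time.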

Disks based on flash memory have no moving parts, so random access to a fragment does not suffer this delay, which makes defragmentation to optimize access speed unnecessary. Furthermore, flash memory can be written only a limited number of times before it fails, so defragmentation is actually detrimental (except in mitigating catastrophic failure).

Windows System Restore points may be deleted during defragmenting/optimizing

Running most defragmenters and optimizers can cause the Microsoft Shadow Copy service to delete some of the oldest restore points, even if the defragmenters/optimizers are built on the Windows API. This is because Shadow Copy keeps track of some of the movements of large files performed by the defragmenters/optimizers. When the total disk space used by shadow copies would exceed a specified threshold, older restore points are deleted until the limit is no longer exceeded.
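The pruning rule described above can be modeled as a loop that drops the oldest restore point until the total fits under the limit. This is a hypothetical illustration of that behavior, not the Volume Shadow Copy Service's actual implementation. `prune_restore_points` and the `(created_at, size)` tuples are invented for the example.

```python
def prune_restore_points(points, limit_bytes):
    """Drop the oldest restore points until their total size fits within `limit_bytes`.

    `points` is a list of (created_at, size_bytes) tuples; the kept points are
    returned oldest first."""
    kept = sorted(points)                  # oldest first by creation time
    total = sum(size for _, size in kept)
    while kept and total > limit_bytes:
        _, size = kept.pop(0)              # delete the oldest restore point
        total -= size
    return kept


# Defragmenting moved large files, so the newest point's storage grew to 1100.
points = [(1, 400), (2, 300), (3, 1100)]
print(prune_restore_points(points, limit_bytes=1500))  # [(2, 300), (3, 1100)]
```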

Defragmenting and optimizing

Besides defragmenting program files, a defragmenting tool can also reduce the time it takes to load programs and open files. For example, the Windows 9x defragmenter included the Intel Application Launch Accelerator, which optimized programs on the disk. The outer tracks of a hard disk have a higher transfer rate than the inner tracks, so placing files on the outer tracks increases performance.

Approach and defragmenters by file-system type

FAT: MS-DOS 6.x and Windows 9x systems come with a defragmentation utility called Defrag. The DOS version is a limited version of Norton SpeedDisk. The version that came with Windows 9x was licensed from Symantec Corporation, and the version that came with Windows 2000 and XP is licensed from Condusiv Technologies.

NTFS was introduced with Windows NT 3.1, but the NTFS file system driver did not include any defragmentation capabilities.[citation needed] In Windows NT 4.0, defragmentation APIs were introduced that third-party tools could use to perform defragmentation tasks; however, no defragmentation software was included. In Windows 2000, Windows XP and Windows Server 2003, Microsoft included a defragmentation tool based on Diskeeper that used the defragmentation APIs and was a snap-in for Computer Management. In Windows Vista, Windows 7 and Windows 8, Microsoft appears to have written its own defragmenter[citation needed], which has no visual disk map and is not part of Computer Management. There are also a number of free and commercial third-party defragmentation products available for Microsoft Windows.

BSD UFS, and particularly FreeBSD, uses an internal reallocator that seeks to reduce fragmentation at the moment the data is written to disk.[citation needed] This effectively controls system degradation after extended use.

Linux ext2, ext3, and ext4: much like UFS, these file systems use allocation techniques designed to keep fragmentation under control at all times. As a result, defragmentation is not needed in the vast majority of cases. ext2 uses an offline defragmenter called e2defrag, which does not work with its successor, ext3. However, other programs, including filesystem-independent ones, may be used to defragment an ext3 file system. ext4 is somewhat backward compatible with ext3, and thus has generally the same level of support from defragmentation programs. In practice, defragmentation is rarely performed on these file systems; for ext4, the e4defrag tool from e2fsprogs can defragment files while the file system is mounted.

Linux Btrfs: supports online defragmentation through the btrfs filesystem defragment command.

VxFS has the fsadm utility, which includes defragmentation operations.

JFS has the defragfs utility on IBM operating systems.

HFS Plus (Mac OS X), introduced in 1998, includes a number of optimizations to the allocation algorithms that try to defragment files while they are being accessed, without a separate defragmenter.[citation needed] If the file system becomes fragmented, the only way to defragment it is to use a utility such as Coriolis Systems' iDefrag,[citation needed] or to completely wipe the hard drive and reinstall the system from scratch.

WAFL in NetApp's ONTAP 7.2 operating system has a command called reallocate that is designed to defragment large files.

XFS provides an online defragmentation utility called xfs_fsr.

SFS performs defragmentation in an almost completely stateless way (apart from the location it is working on), so defragmentation can be stopped and started instantly.[citation needed]

ADFS, the file system used by RISC OS and earlier Acorn Computers, keeps file fragmentation under control without requiring manual defragmentation.
